For years, engineers have had to pick sides: DRAM for speed, flash for density. Kioxia’s latest prototype suggests that trade-off may not hold for much longer. The company has built a 5 TB flash module that delivers 64 GB/s of bandwidth, putting it in the same conversation as memory technologies normally reserved for servers, while drawing little enough power to run at the edge.

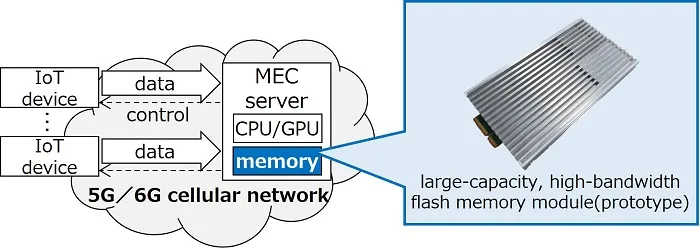

The project was carried out under Japan’s NEDO “Post-5G Information and Communication Systems Infrastructure Enhancement R&D Project,” a national effort to prepare infrastructure for AI-heavy workloads in 5G and 6G networks. With power consumption kept under 40 W, the prototype is already practical enough to start conversations about deployment.

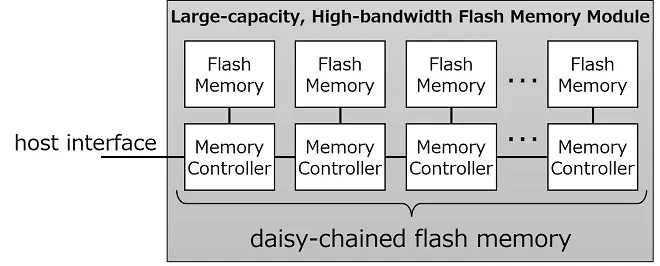

Daisy-Chain Thinking

Instead of wiring controllers and devices onto a shared bus, Kioxia has chained them together. Each controller talks to its local flash and then passes data along the line. Because every hop is its own point-to-point link, adding more flash does not load a common bus, so capacity can grow without choking bandwidth. That’s a key distinction from older flash systems, where scaling up usually meant slowing down.
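The forwarding behaviour described above can be sketched in a few lines of Python. This is a toy model only, not Kioxia’s controller logic: the `Controller` class, the `build_chain` and `route_read` helpers, and the flat address layout are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Controller:
    """One hypothetical chain node: a controller plus its local flash."""
    name: str
    capacity: int                              # addressable units of local flash
    downstream: Optional["Controller"] = None  # next controller in the chain


def build_chain(capacities):
    """Link controllers head to tail and return the head (host side)."""
    nodes = [Controller(f"ctrl{i}", cap) for i, cap in enumerate(capacities)]
    for upstream, downstream in zip(nodes, nodes[1:]):
        upstream.downstream = downstream
    return nodes[0]


def route_read(head, address):
    """Walk the chain until a controller owns the address.

    Returns (controller name, local offset, hops travelled).
    """
    node, hops = head, 0
    while node is not None:
        if address < node.capacity:
            return node.name, address, hops
        address -= node.capacity   # not local: pass the request along
        node, hops = node.downstream, hops + 1
    raise IndexError("address beyond total chain capacity")


chain = build_chain([4, 4, 4])
print(route_read(chain, 1))   # served by the first controller, no forwarding
print(route_read(chain, 9))   # forwarded twice before being served
```

In this sketch, growing capacity means appending another node to the list passed to `build_chain`; no existing link changes. The cost shows up as extra hops for addresses far down the chain.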

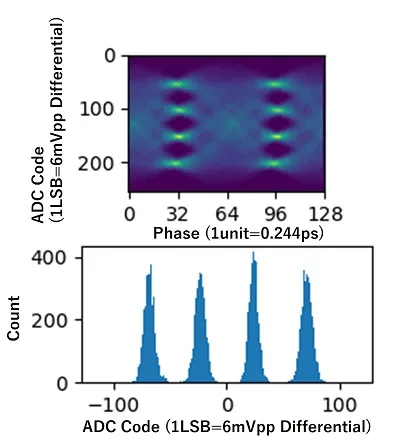

To keep the chain fast, the company used high-speed serial links with 128 Gbps PAM4 signaling rather than wide parallel buses. This cuts the number of pins while sustaining very high throughput. The measured waveforms show the links holding steady at those speeds, which suggests the design is more than a lab curiosity.
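A quick back-of-the-envelope calculation shows what the 128 Gbps figure implies. PAM4 uses four amplitude levels, so each symbol carries two bits. The link count below is raw arithmetic that ignores encoding and protocol overhead; it is not a statement about how many links the prototype actually uses.

```python
import math

LINK_RATE_GBPS = 128        # per-link bit rate quoted in the article
PAM_LEVELS = 4              # PAM4: four amplitude levels per symbol
TARGET_GBYTES_PER_S = 64    # module bandwidth quoted in the article

bits_per_symbol = int(math.log2(PAM_LEVELS))          # 2 bits per symbol
symbol_rate_gbaud = LINK_RATE_GBPS / bits_per_symbol  # symbols per second, in GBaud
raw_gbytes_per_s = LINK_RATE_GBPS / 8                 # raw bytes per second per link

# Assumption: no encoding or protocol overhead is subtracted here.
links_for_target = math.ceil(TARGET_GBYTES_PER_S / raw_gbytes_per_s)

print(f"{symbol_rate_gbaud:.0f} GBaud per link")
print(f"{raw_gbytes_per_s:.0f} GB/s raw per link")
print(f"{links_for_target} links' worth of raw bandwidth for {TARGET_GBYTES_PER_S} GB/s")
```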

Making Flash Act Faster

Raw flash isn’t known for low latency, so Kioxia layered on techniques to close the gap. A prefetch function fetches data ahead of the request during sequential reads, while interface tweaks like low-amplitude signaling and distortion correction lift the interface between each controller and its flash to 4.0 Gbps. Together, these improvements bring the module closer to the responsiveness engineers expect from DRAM-heavy systems.
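The article does not describe how Kioxia’s prefetch function works internally, so the following is only a generic read-ahead sketch of the idea: once two consecutive pages are requested, the next few are fetched before anyone asks for them. The `PrefetchingReader` class, its `depth` parameter, and the list standing in for flash are all hypothetical.

```python
class PrefetchingReader:
    """Generic sequential read-ahead; not Kioxia's actual algorithm."""

    def __init__(self, backing, depth=4):
        self.backing = backing    # list of pages standing in for slow flash
        self.depth = depth        # how many pages to read ahead
        self.buffer = {}          # pages already fetched ahead of time
        self.last_page = None
        self.hits = 0             # reads served from the prefetch buffer
        self.slow_reads = 0       # reads that had to wait on the backing store

    def read(self, page):
        if page in self.buffer:
            data = self.buffer.pop(page)
            self.hits += 1
        else:
            data = self.backing[page]
            self.slow_reads += 1

        if self.last_page is not None and page == self.last_page + 1:
            # Sequential pattern detected: fetch the next pages in advance.
            end = min(page + 1 + self.depth, len(self.backing))
            for ahead in range(page + 1, end):
                if ahead not in self.buffer:
                    self.buffer[ahead] = self.backing[ahead]
        else:
            # Pattern broken: drop stale read-ahead data.
            self.buffer.clear()

        self.last_page = page
        return data


pages = [f"page{i}" for i in range(16)]
reader = PrefetchingReader(pages)
result = [reader.read(i) for i in range(16)]
print(reader.hits, reader.slow_reads)   # 14 2
```

In this run only the first two reads wait on the backing store; once the sequential pattern is established, every later read is served from the buffer.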

At the module level, a PCIe 6.0 host interface ensures compatibility with high-performance servers. In testing, the prototype consistently reached its 64 GB/s bandwidth target while staying within its power budget.
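To put the headline numbers in perspective, the short calculation below derives two figures from values quoted in the article: how long it would take to stream the full module once at peak bandwidth, and the minimum bandwidth per watt implied by the 40 W ceiling. It assumes decimal units (1 TB = 1000 GB) and sustained peak throughput, neither of which the article specifies.

```python
CAPACITY_TB = 5          # module capacity quoted in the article
BANDWIDTH_GB_S = 64      # bandwidth quoted in the article
POWER_CEILING_W = 40     # article states power stays under this value

capacity_gb = CAPACITY_TB * 1000                  # assumption: decimal terabytes
full_scan_seconds = capacity_gb / BANDWIDTH_GB_S  # one full pass at peak rate

# Power is *under* 40 W, so this is a lower bound on efficiency.
min_gb_s_per_watt = BANDWIDTH_GB_S / POWER_CEILING_W

print(f"Full read of the module: about {full_scan_seconds:.1f} s")
print(f"At least {min_gb_s_per_watt:.1f} GB/s per watt")
```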

Edge AI in Focus

Why does this matter? Because AI is moving out of the cloud. In 5G and 6G networks, mobile edge computing (MEC) servers are expected to handle more of the work closer to devices, whether that’s generative AI models, IoT data streams, or industrial analytics. Storage at the edge has to be dense, fast, and efficient all at once, and that’s exactly the balance Kioxia is trying to strike.

What Comes Next

Kioxia isn’t calling this a product yet, but the direction is clear. If flash modules like this make it to production, they could give edge servers the ability to run workloads that today are still tied to the data center. For engineers designing around AI, that opens up new options and raises new questions about how far flash can go when pushed beyond its traditional role.